Computational Issues

A metropolitan region can consist of 10 million or more inhabitants, which places considerable demands on computational performance. This is made worse by the relaxation iterations. In contrast to simulations in the natural sciences, traffic particles (travelers, vehicles) have internal intelligence. As pointed out in the introduction, this internal intelligence translates into rule-based code, which does not run well on traditional supercomputers (e.g. Cray) but runs well on modern workstation architectures. This makes traffic simulations ideally suited for clusters of PCs, also called Beowulf clusters. We use domain decomposition, that is, each CPU obtains a patch ("domain") of the geographical region. Information and vehicles are exchanged between the domains via message passing using MPI (Message Passing Interface, www-unix.mcs.anl.gov/mpi).
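The idea of domain decomposition can be illustrated with a minimal sketch. The text does not say how the patches are chosen, so the regular grid used here is purely an assumption, and the names `assign_domains` and `boundary_links` are hypothetical. The sketch only identifies which links cross a domain boundary, i.e. where vehicles would have to be handed to a neighboring CPU; the actual MPI message exchange is left out.

```python
from collections import defaultdict


def assign_domains(nodes, n_cols, n_rows, width, height):
    """Map each node id to a domain (CPU rank) by its cell in a regular grid.

    The regular grid is an illustrative assumption, not the partitioning
    used in the paper.
    """
    owner = {}
    for node_id, (x, y) in nodes.items():
        col = min(int(x / width * n_cols), n_cols - 1)
        row = min(int(y / height * n_rows), n_rows - 1)
        owner[node_id] = row * n_cols + col
    return owner


def boundary_links(links, owner):
    """Group the links whose end nodes lie in different domains.

    A vehicle leaving such a link has to be sent to the neighboring CPU.
    """
    crossing = defaultdict(list)
    for from_node, to_node in links:
        src, dst = owner[from_node], owner[to_node]
        if src != dst:
            crossing[(src, dst)].append((from_node, to_node))
    return dict(crossing)


# Toy network: four nodes in a 2000 m x 1000 m region, split across 2 CPUs.
nodes = {"a": (100, 500), "b": (900, 500), "c": (1100, 500), "d": (1900, 500)}
links = [("a", "b"), ("b", "c"), ("c", "d"), ("c", "b")]

owner = assign_domains(nodes, n_cols=2, n_rows=1, width=2000, height=1000)
crossing = boundary_links(links, owner)
for (src, dst), crossing_links in sorted(crossing.items()):
    print(f"CPU {src} -> CPU {dst}: {crossing_links}")
```

Each key of `crossing` corresponds to one pair of neighboring domains, and therefore to one message that has to be sent per time step.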

Table 1 shows the computing speed of the queue simulation run on three hours of the Gotthard scenario described in Sect. 3.2. The table lists elapsed time (wall clock time), real-time ratio, and speedup for the same simulation run on different numbers of CPUs, using a standard 100 Mbit Ethernet interface between the computers. The real-time ratio (RTR) states how much faster than reality the simulation runs: an RTR of 100 means that 100 seconds of the traffic scenario are simulated in one second of wall clock time. Speedup and RTR are related: speedup compares the wall clock time of a multiple-CPU simulation with that of the single-CPU simulation, whereas RTR compares the wall clock time with the simulated time. The simulation scales fairly well for this scenario size and this computing architecture up to about 64 CPUs. Above 80 CPUs, performance barely increases any further.
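The two measures can be written down in a few lines. The following sketch uses hypothetical function names and takes three elapsed times from Table 1 as input; the three simulated hours correspond to 10800 seconds.

```python
def real_time_ratio(simulated_seconds, wall_clock_seconds):
    """How many simulated seconds pass per second of wall clock time."""
    return simulated_seconds / wall_clock_seconds


def speedup(single_cpu_seconds, multi_cpu_seconds):
    """Single-CPU wall clock time divided by multi-CPU wall clock time."""
    return single_cpu_seconds / multi_cpu_seconds


SIMULATED_SECONDS = 3 * 3600  # three hours of the scenario

# Elapsed wall clock times in seconds, taken from Table 1.
elapsed = {1: 552.0, 4: 272.7, 64: 65.0}

for n_cpus, seconds in elapsed.items():
    rtr = real_time_ratio(SIMULATED_SECONDS, seconds)
    s = speedup(elapsed[1], seconds)
    print(f"{n_cpus:3d} CPUs: RTR = {rtr:5.0f}, speedup = {s:.2f}")
```

Both quantities are derived from the same elapsed time, which is why they rise and saturate together.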

The bottleneck to faster computing speeds is the latency of the Ethernet interface (Nagel and Rickert, 2001; Rickert and Nagel, 2001), which is about 0.5-1 msec per message. Since each domain has on average six neighbors, meaning six message sends per time step, running 100 times faster than real time (100 one-second time steps per second of wall clock time) means that between $6 \times 100 \times 0.5\,\mathrm{msec} = 0.3\,\mathrm{sec}$ and $6 \times 100 \times 1\,\mathrm{msec} = 0.6\,\mathrm{sec}$ per second, corresponding to between 30% and 60% of the computing time, is used up by message passing. As usual, one could run larger scenarios at the same computational speed by using more CPUs. However, running the same scenarios faster by adding more CPUs demands a low-latency communication network, such as Myrinet, or a supercomputer.
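The latency estimate above can be reproduced with a short calculation. This is a sketch under two assumptions that are not spelled out in the text: the simulation advances in time steps of one simulated second, and the six messages of a time step are sent one after another, each paying the full latency. The function names are hypothetical.

```python
def message_time_fraction(rtr, time_step_s, messages_per_step, latency_s):
    """Fraction of each wall clock second spent waiting on message latency."""
    steps_per_wall_second = rtr / time_step_s
    return steps_per_wall_second * messages_per_step * latency_s


def latency_bound_rtr(time_step_s, messages_per_step, latency_s):
    """RTR at which message latency alone would use up all wall clock time."""
    return time_step_s / (messages_per_step * latency_s)


TIME_STEP_S = 1.0        # assumed: one simulated second per time step
MESSAGES_PER_STEP = 6    # six neighbors per domain on average

for latency_ms in (0.5, 1.0):
    latency_s = latency_ms / 1000.0
    fraction = message_time_fraction(100, TIME_STEP_S, MESSAGES_PER_STEP, latency_s)
    bound = latency_bound_rtr(TIME_STEP_S, MESSAGES_PER_STEP, latency_s)
    print(f"latency {latency_ms} msec: {fraction:.0%} of the time at RTR 100, "
          f"latency-bound RTR about {bound:.0f}")
```

Under these simplifying assumptions, the second function gives a rough ceiling for the RTR that does not depend on the number of CPUs. It is an extrapolation of the argument, not a result from the text.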

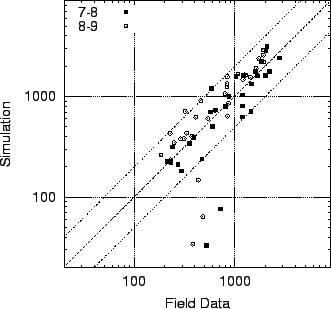

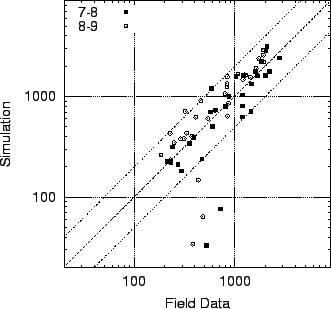

Fig. 6 compares the measured RTR of the simulation run over a 100 Mbit Ethernet interface with that of the simulation run over a Myrinet interface, all else being equal. Since Myrinet has a lower latency than Ethernet, performance indeed increases as expected. Systematic computational speed predictions for different types of computer architectures can be found in Rickert and Nagel (2001) and Nagel and Rickert (2001).

Table 1: Computational performance of the queue micro-simulation on a Beowulf Pentium cluster. The first column indicates the number of processors used. The second column gives the number of seconds taken to run the first 3 hours of the Gotthard scenario (iteration 49). The third column gives the real-time ratio (RTR), which is how much faster than reality the simulation is. An RTR of 100 means that one simulates 100 seconds of the traffic scenario in one second of wall clock time. The fourth column is the speedup, the ratio of the single-processor execution time to the execution time on the given number of processors.

| Number of Processors | Time Elapsed (s) | Real Time Ratio | Speedup |
|---|---|---|---|
| 1 | 552 | 20 | 1.00 |
| 4 | 272.7 | 40 | 2.02 |
| 8 | 179 | 60 | 3.08 |
| 16 | 124 | 87 | 4.45 |
| 32 | 82.4 | 131 | 6.70 |
| 48 | 74.4 | 145 | 7.42 |
| 64 | 65 | 166 | 8.49 |
| 80 | 58.4 | 185 | 9.45 |
| 96 | 55.6 | 194 | 9.93 |
| 108 | 58.2 | 186 | 9.48 |
| 125 | 59.2 | 182 | 9.32 |

Figure 6: Computational performance of the queue micro-simulation on a Beowulf Pentium cluster using Ethernet and Myrinet network interfaces.

Kai Nagel

2002-05-31
